Why Organizations Still Use Batch Jobs

Most companies process data on schedules. You set a job to run at 11 PM. Data flows in. Warehouse updates. Tomorrow morning, reports are ready. It’s predictable. Infrastructure is stable. Your ops team sleeps.

Then something breaks and you realize the entire decision-making apparatus runs 18 hours behind reality.

Batch processing dominated because it was cheap to build and operate. Storage was expensive. Network bandwidth mattered. Running compute jobs continuously seemed wasteful. So the industry standardized around nightly runs, weekly reconciliations, monthly closes. Good enough became the standard.

That standard is now a liability.

The Technical Gap

A fraudulent transaction clears in milliseconds. Your batch job runs 8 hours later. Detection happens retroactively. The money is already gone. The system flagged it, but nobody was there to stop it because the check happened after everyone left for the day.

Retailers see shopping carts abandoned in real-time but analyze the behavior tomorrow. By tomorrow, the customer is gone. The competitive window has closed.

Manufacturing facilities have sensors transmitting data continuously but maintenance schedules run on monthly analysis. Equipment fails at 2 PM on a Tuesday. Maintenance team sees the problem Thursday morning at standup. Production line sits idle.

The technical issue is straightforward: batch systems introduce artificial latency into decision loops. Not because the analysis takes time. Because someone scheduled the analysis for later.

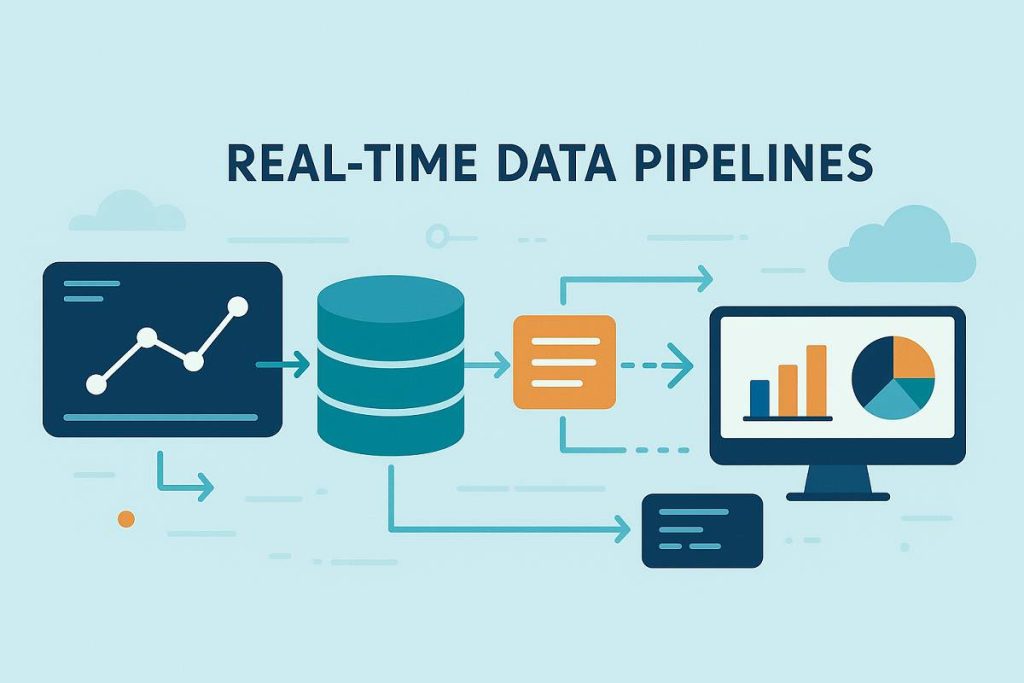

How Stream Processing Actually Works

Streaming systems don’t wait. Events arrive. Processing begins immediately. Results available instantly to downstream systems.

This requires different architectural thinking. You’re not building a sequence of jobs that run once daily. You’re building a computational graph that stays up permanently, with data flowing through it as events arrive.
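A rough way to picture such a graph is a chain of Python generators, where each stage handles one event at a time and hands it straight to the next. This is only a toy sketch: the stage names and the `user,action` event format are assumptions made for illustration, and a real engine would distribute these stages across machines.

```python
from typing import Dict, Iterable, Iterator, Tuple


def parse(lines: Iterable[str]) -> Iterator[dict]:
    """Stage 1: turn raw 'user,action' lines into event dicts."""
    for line in lines:
        user, action = line.strip().split(",")
        yield {"user": user, "action": action}


def only_purchases(events: Iterable[dict]) -> Iterator[dict]:
    """Stage 2: drop everything except purchase events."""
    for event in events:
        if event["action"] == "purchase":
            yield event


def running_count(events: Iterable[dict]) -> Iterator[Tuple[str, int]]:
    """Stage 3: emit an updated per-user purchase count after every event."""
    counts: Dict[str, int] = {}
    for event in events:
        counts[event["user"]] = counts.get(event["user"], 0) + 1
        yield event["user"], counts[event["user"]]


# Hypothetical input; in production this would be an unbounded source.
raw_events = ["alice,view", "alice,purchase", "bob,purchase", "alice,purchase"]

# Wiring the stages builds the graph; nothing runs until results are pulled.
pipeline = running_count(only_purchases(parse(raw_events)))
for user, count in pipeline:
    print(user, count)
```

Each result is available as soon as its event has passed through all three stages, without waiting for the rest of the input.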

The Ingestion Problem

Getting data into processing systems at scale creates its own challenges. Raw event volume from modern applications exceeds what traditional databases can ingest. A moderately trafficked web application generates thousands of events per second. A financial exchange generates hundreds of thousands.

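Apache Kafka addresses this through a pub-sub model. Applications publish events. Processing systems subscribe. Kafka itself acts as a durable buffer. If the downstream processor fails, Kafka keeps the messages for the configured retention period, and the processor resumes from its last committed offset once it recovers. No data loss during outages, as long as the outage is shorter than the retention window.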
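The buffering idea can be sketched without a broker. The `ToyLog` class below is a hypothetical in-memory stand-in for a single partition, not Kafka's client API: it only shows that records stay in the log after being read and that each consumer group tracks its own position.

```python
class ToyLog:
    """In-memory stand-in for a single partition of a durable log."""

    def __init__(self):
        self._records = []     # append-only; reading never removes anything
        self._committed = {}   # consumer group -> next offset to read

    def publish(self, record):
        self._records.append(record)
        return len(self._records) - 1  # offset assigned to this record

    def poll(self, group, max_records=10):
        start = self._committed.get(group, 0)
        batch = self._records[start:start + max_records]
        return list(enumerate(batch, start=start))

    def commit(self, group, offset):
        # Mark everything up to and including `offset` as processed.
        self._committed[group] = offset + 1


log = ToyLog()
for event in ["order-1", "order-2", "order-3"]:
    log.publish(event)

# The consumer handles the first record, commits it, then "crashes".
first_offset, first_record = log.poll("fraud-scorer")[0]
log.commit("fraud-scorer", first_offset)

# After a restart, polling resumes from the committed position.
resumed = log.poll("fraud-scorer")
print(resumed)  # [(1, 'order-2'), (2, 'order-3')]
```

A second consumer group polling the same log would start from offset 0, which is how several processing systems can read the same events independently.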

The practical issue: Kafka requires operational expertise. Partition management, rebalancing, and lag monitoring aren’t set-and-forget concerns. A misconfigured partition strategy bottlenecks your entire pipeline. Consumer lag keeps growing during peak hours whenever producers outpace consumers. You discover the problem when SLA breaches start appearing.
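Consumer lag itself is simple arithmetic: for each partition, the newest offset in the log minus the offset the consumer has committed. A minimal sketch, assuming the two offset maps have already been fetched from the broker (the numbers and the alert threshold are made up):

```python
def partition_lag(end_offsets, committed_offsets):
    """Lag per partition = newest offset in the log - offset the consumer committed."""
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in end_offsets.items()
    }


def lagging_partitions(lag, threshold):
    """Partitions whose backlog exceeds the alerting threshold."""
    return sorted(p for p, value in lag.items() if value > threshold)


# Hypothetical snapshot: partition 2 receives far more traffic than the others,
# for example because of a skewed partition key.
end_offsets = {0: 1500, 1: 1480, 2: 9200}
committed_offsets = {0: 1490, 1: 1475, 2: 3100}

lag = partition_lag(end_offsets, committed_offsets)
print(lag)                            # {0: 10, 1: 5, 2: 6100}
print(lagging_partitions(lag, 1000))  # [2]
```

Watching lag per partition rather than in total is what exposes a skewed partition strategy: the aggregate can look acceptable while one partition falls steadily behind.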

Managed alternatives like Amazon Kinesis handle the infrastructure but add cost and reduce flexibility. It’s a tradeoff between operational burden and control.

Stream Processing Realities

Processing events one-by-one sounds simple until you encounter real constraints:

A user session spans multiple events. You need session state—what products they viewed, items in cart, previous purchases. Where does this state live? RocksDB? Redis? Each choice has implications for throughput, failover behavior, memory consumption.
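One way to keep the backend choice open is to hide it behind a small get/put interface. The sketch below uses a hypothetical `InMemoryStore`; a wrapper around RocksDB or Redis would expose the same two methods. The event fields (`user`, `type`, `product`) are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class SessionState:
    viewed: List[str] = field(default_factory=list)
    cart: List[str] = field(default_factory=list)


class InMemoryStore:
    """Hypothetical backend; only get and put are required by the processor."""

    def __init__(self):
        self._data: Dict[str, SessionState] = {}

    def get(self, key):
        return self._data.get(key, SessionState())

    def put(self, key, state):
        self._data[key] = state


def apply_event(store, event):
    """Read the user's state, fold one event into it, write it back."""
    state = store.get(event["user"])
    if event["type"] == "view":
        state.viewed.append(event["product"])
    elif event["type"] == "add_to_cart":
        state.cart.append(event["product"])
    store.put(event["user"], state)
    return state


store = InMemoryStore()
for event in [
    {"user": "alice", "type": "view", "product": "p1"},
    {"user": "alice", "type": "view", "product": "p2"},
    {"user": "alice", "type": "add_to_cart", "product": "p2"},
]:
    apply_event(store, event)

print(store.get("alice"))
```

Every event costs one read and one write against the store, which is why the backend's latency and failover behavior show up directly in pipeline throughput.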

Events arrive out of order. A mobile user clicks something at 2:01 PM, but network delay means Kafka receives it at 2:03. The window that event belongs to has already closed. Do you reopen it? Update historical results? Each decision cascades through downstream systems.
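A common answer is to track a watermark and accept late events only within a bounded lateness. The sketch below is deliberately simplified: it takes the highest event time seen so far as the watermark, and the window size and lateness values are arbitrary assumptions. Production engines compute watermarks more carefully.

```python
from collections import defaultdict

WINDOW_SECONDS = 60       # tumbling window size (assumed)
ALLOWED_LATENESS = 120    # how long a closed window still accepts events (assumed)


class WindowedCounter:
    """Counts events per event-time window and drops events that are too late."""

    def __init__(self):
        self.counts = defaultdict(int)  # window start -> number of events
        self.watermark = 0              # simplification: highest event time seen
        self.dropped = []

    def on_event(self, event_time):
        self.watermark = max(self.watermark, event_time)
        window_start = event_time - event_time % WINDOW_SECONDS
        window_end = window_start + WINDOW_SECONDS
        if window_end + ALLOWED_LATENESS <= self.watermark:
            self.dropped.append(event_time)  # too late: window is finalized
            return False
        self.counts[window_start] += 1       # on time, or late but tolerated
        return True


counter = WindowedCounter()
# Event times in seconds. 50 arrives after 130 (late but tolerated);
# 20 arrives after 400 (too late, dropped).
for t in [10, 70, 130, 50, 400, 20]:
    counter.on_event(t)

print(dict(counter.counts))  # {0: 2, 60: 1, 120: 1, 360: 1}
print(counter.dropped)       # [20]
```

Accepting the late event at 50 means the count for the first window changes after it was first reported, so downstream consumers have to tolerate updated results.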

Processing must be idempotent. If your system crashes and replays events, same calculation runs twice. Output can’t double-count. This seems obvious until you try implementing it at 100k events per second.
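The simplest form of idempotency is deduplication by event ID. The sketch below assumes every event carries a unique `id` field, which the text does not state; the unbounded `seen_ids` set is also a simplification that would need expiry or a transactional sink at high volume.

```python
class IdempotentTotals:
    """Per-account totals that ignore events already applied."""

    def __init__(self):
        self.seen_ids = set()  # grows without bound here; real systems expire old IDs
        self.totals = {}

    def apply(self, event):
        if event["id"] in self.seen_ids:
            return False       # replayed event: skip so nothing is double-counted
        self.seen_ids.add(event["id"])
        account = event["account"]
        self.totals[account] = self.totals.get(account, 0) + event["amount"]
        return True


events = [
    {"id": "e1", "account": "A", "amount": 100},
    {"id": "e2", "account": "A", "amount": 50},
    {"id": "e3", "account": "B", "amount": 20},
]

totals = IdempotentTotals()
for event in events:
    totals.apply(event)

# Simulate a crash followed by a replay of the last two events.
for event in events[1:]:
    totals.apply(event)

print(totals.totals)  # {'A': 150, 'B': 20}
```

The hard part at 100k events per second is not this check but keeping the set of seen IDs small, fast, and consistent with the output it protects.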

Apache Flink handles many of these concerns through sophisticated checkpoint mechanisms and exactly-once semantics. But “handles” means the framework does the work, not that problems disappear. You still need to understand what’s happening.

Spark Structured Streaming offers similar guarantees with a simpler operational model. The tradeoff is latency. Spark processes data in micro-batches internally, so true sub-100ms latency becomes harder. Acceptable for many use cases. Unacceptable for others.

Storage Complications

Where does processed data live? Old data warehouses weren’t built for continuous updates. Teradata and Vertica can technically accept streaming inserts but performance degrades. Query times extend. Schema modifications become risky.

Modern warehouse architecture (Snowflake, BigQuery, Databricks) handles real-time ingest differently. Built on cloud object storage. Columnar format enables fast queries even with frequent updates. Schema flexibility baked in.

Cost structure changes though. Compute pricing shifts from predictable nightly batch runs to continuous consumption-based billing. You save money during low-traffic periods but pay more during peak times.

Implementation Patterns in Production

Financial Services Approach

A bank’s fraud operation looks different when processing transactions in real-time versus nightly:

Batch system: 40-50% of fraudulent transactions caught after post-hoc analysis. Customers dispute charges. Chargeback process follows. Months of back-and-forth.

Real-time system: Transaction scoring within 200ms of request. Decision gates the transaction if risk exceeds threshold. Customer attempts purchase, gets declined, tries different card. Fraud prevented before damage.

Implementation requires different ML model architecture. Batch systems train on historical data weekly. Models capture aggregate patterns but miss current trend shifts. Real-time systems retrain continuously, sometimes hourly, to adapt to evolving fraud patterns. This creates operational complexity: model versioning, A/B testing infrastructure, rollback procedures.

A production system managing this needs canary deployments for model updates. Serve new model to 5% of traffic. Monitor for unexpected behavior. Gradually increase percentage. Monitor full rollout for 24 hours before committing.
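One possible way to implement the traffic split is deterministic hashing, so the same transaction always lands on the same model and raising the percentage only adds traffic to the canary. The model names and ID format below are placeholders.

```python
import hashlib


def bucket(key: str) -> int:
    """Map a key to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_model(transaction_id: str, canary_percent: int) -> str:
    """Route to the candidate model when the key's bucket falls under the canary share."""
    return "candidate" if bucket(transaction_id) < canary_percent else "stable"


# Hypothetical transaction IDs.
ids = [f"txn-{i}" for i in range(10_000)]

for percent in (5, 25, 100):
    share = sum(choose_model(t, percent) == "candidate" for t in ids) / len(ids)
    print(f"canary at {percent}%: {share:.1%} of traffic on the candidate model")
```

Because the bucket depends only on the ID, rolling back is just setting the percentage to zero; no per-request routing state has to be cleaned up.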

E-Commerce Reality

Recommendation engines illustrate the technical-to-business translation:

Batch system generates recommendations nightly. User visits next morning, sees suggestions based on yesterday’s activity. Useful sometimes. Often dated—especially for fast-moving catalog categories.

Real-time system updates recommendations on every event (page view, click, add-to-cart). When customer lands on homepage, system already knows their last 20 minutes of behavior. Recommendations reflect current context.

Technically this means maintaining user profiles in a distributed cache (Redis cluster typically). Updating profiles on every event. Serving recommendations from cache (< 50ms latency requirement). Synchronizing between real-time processor and cache layer.

Failure mode: cache eviction during peak traffic. User activity spikes. Cache fills. Older profiles get evicted. Recommendation falls back to generic “popular items.” Conversion rate drops during peak periods, exactly the wrong time for it to happen.

Solution adds complexity: distributed cache with replication, circuit breakers on cache failures, fallback recommendation strategies. Each layer is another place where problems hide.
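The eviction failure mode and the fallback strategy can be reproduced with a small least-recently-used cache. This is a single-process sketch with invented item names, not a Redis client; it only shows how a miss turns into a generic recommendation.

```python
from collections import OrderedDict


class ProfileCache:
    """Tiny LRU cache standing in for the distributed profile cache."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._data = OrderedDict()

    def put(self, user, profile):
        self._data[user] = profile
        self._data.move_to_end(user)
        while len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used profile

    def get(self, user):
        if user not in self._data:
            return None
        self._data.move_to_end(user)
        return self._data[user]


POPULAR_ITEMS = ["bestseller-1", "bestseller-2"]  # hypothetical generic fallback


def recommend(cache, user):
    """Return (items, source); fall back to popular items on a cache miss."""
    profile = cache.get(user)
    if profile is None:
        return POPULAR_ITEMS, "fallback"
    return ["more-like-" + item for item in profile[-2:]], "personalized"


cache = ProfileCache(capacity=2)
cache.put("alice", ["tent", "stove"])
cache.put("bob", ["laptop"])
cache.put("carol", ["boots", "socks"])  # traffic spike: alice's profile is evicted

print(recommend(cache, "alice"))  # (['bestseller-1', 'bestseller-2'], 'fallback')
print(recommend(cache, "carol"))  # (['more-like-boots', 'more-like-socks'], 'personalized')
```

Tracking the share of responses tagged `fallback` is a cheap way to notice that the cache is undersized before the conversion numbers show it.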

Manufacturing Monitoring

Equipment monitoring combines high-frequency data with operational constraints:

Sensors report readings every 5-10 seconds. Over a single day, that is roughly 8,600 to 17,000 readings per device. Across hundreds of devices, it adds up to millions of readings per day, and the timeseries history keeps growing. Pattern detection in real-time becomes computationally expensive.

Most implementations use feature extraction: calculate rolling statistics (mean, variance, deviation from baseline) in the stream. Feed aggregated features to anomaly detection model. Triggers alert if anomaly score exceeds threshold.
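A minimal version of this feature extraction keeps a fixed-size window of recent readings and scores each new reading by how many standard deviations it sits from the window mean. The window size, warm-up length, and the temperature-like readings are all assumptions chosen for the example.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyScorer:
    """Scores each reading against the mean and spread of recent readings."""

    def __init__(self, window=20, warmup=5, min_sigma=1e-6):
        self.window = deque(maxlen=window)  # most recent readings only
        self.warmup = warmup                # readings needed before scoring starts
        self.min_sigma = min_sigma          # avoids division by zero on flat signals

    def score(self, reading):
        if len(self.window) < self.warmup:
            z = 0.0
        else:
            mu = mean(self.window)
            sigma = max(pstdev(self.window), self.min_sigma)
            z = abs(reading - mu) / sigma
        self.window.append(reading)
        return z


scorer = RollingAnomalyScorer()
normal = [69.5, 70.5] * 10              # hypothetical readings with mild noise
normal_scores = [scorer.score(r) for r in normal]
spike_score = scorer.score(90.0)        # sudden jump

THRESHOLD = 3.0                         # assumed alert threshold
print(max(normal_scores) < THRESHOLD)   # True
print(spike_score > THRESHOLD)          # True
```

Here the z-score plays the role of the anomaly model; a trained model would consume the same rolling mean and variance as input features.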

Practical issue: false positives. Sensor noise creates alerts for normal behavior. Manufacturing team starts ignoring alerts. When real failure occurs, alert gets dismissed. System becomes liability instead of asset.
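One mitigation teams sometimes use is debouncing: raise an alert only after several consecutive readings exceed the threshold, so a single noisy sample stays silent. The sketch below assumes anomaly scores are already computed; the threshold and streak length are arbitrary, and the price is a short delay before a real failure is reported.

```python
class DebouncedAlert:
    """Fires once when the score stays above the threshold for N readings in a row."""

    def __init__(self, threshold, required_consecutive=3):
        self.threshold = threshold
        self.required = required_consecutive
        self.streak = 0

    def update(self, score):
        if score > self.threshold:
            self.streak += 1
        else:
            self.streak = 0
        # True only at the moment the streak reaches the required length,
        # so a sustained anomaly produces one alert instead of a flood.
        return self.streak == self.required


alert = DebouncedAlert(threshold=3.0, required_consecutive=3)
scores = [5, 1, 5, 5, 1, 5, 5, 5, 5]   # hypothetical anomaly scores
fired_at = [i for i, s in enumerate(scores) if alert.update(s)]
print(fired_at)  # [7]
```

The isolated spikes at positions 0, 2, and 3 never alert; only the sustained run starting at position 5 does.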

Tuning thresholds requires domain expertise. Too aggressive, too many false alerts. Too lenient, misses problems. And thresholds drift—equipment ages, operating conditions change seasonally, baseline behavior shifts over time. Static thresholds become obsolete.

Good implementations retrain anomaly models periodically, incorporating recent operational data. Another operational complexity layer.

Cost Structure Breakdown

Real-time infrastructure has a different cost profile than batch:

- Batch systems: High capital upfront for compute infrastructure. Predictable operating costs. $200k one-time infrastructure investment, $50k yearly operations.

- Streaming systems: Lower capital, consumption-based pricing. $100k initial setup, but $200k+ yearly for cloud infrastructure running continuously. Scales with data volume.
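Plugging the figures above into a cumulative cost comparison shows where the two lines cross. The sketch treats the streaming run rate as a flat $200k per year, the lower bound of the "$200k+" figure, and ignores growth with data volume, so it understates streaming cost.

```python
def cumulative_cost(upfront, yearly, years):
    """Total spend after a given number of years."""
    return upfront + yearly * years


def crossover_years(upfront_a, yearly_a, upfront_b, yearly_b):
    """Point in time where both options have cost the same, or None if they never meet."""
    if yearly_a == yearly_b:
        return None
    return (upfront_a - upfront_b) / (yearly_b - yearly_a)


BATCH = (200_000, 50_000)        # upfront, yearly (figures from the text)
STREAMING = (100_000, 200_000)   # upfront, yearly lower bound (figures from the text)

t = crossover_years(*BATCH, *STREAMING)
print(f"costs are equal after about {round(t * 12, 1)} months")

for years in (1, 3, 5):
    batch_total = cumulative_cost(*BATCH, years)
    streaming_total = cumulative_cost(*STREAMING, years)
    print(years, batch_total, streaming_total)
```

With these numbers streaming is the cheaper option only for roughly the first eight months; after three years it has cost $700k against $350k for batch, before counting any business benefit from lower latency.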

For organizations processing modest data volumes (terabytes, not petabytes), streaming becomes more expensive. For massive scale (Facebook, Netflix, Uber scale), real-time becomes cheaper because batch operations would require enormous nightly clusters.

The break-even point varies by industry and use case. Financial services breaks even immediately (fraud prevention ROI). E-commerce breaks even within months (conversion improvement). Data science analytics might never break even—doesn’t require sub-minute latency.

When Real-Time Doesn’t Make Sense

Not every data problem needs streaming:

- Historical analysis (last 5 years of trends) doesn’t benefit from real-time. Batch is fine.

- Monthly reporting and reconciliation work perfectly on batch. No business requirement for daily refresh.

- Exploratory data science benefits from batch (easier debugging, reproducibility).

- Compliance reporting can run on a batch schedule.

Real-time makes sense when: decision window is minutes or less, competitive advantage requires speed, business impact scales with latency reduction.

Technical Skill Requirements

This is where streaming projects commonly stumble.

- Building batch pipelines: SQL skill level. Learn the framework basics. Set it running. Monitor overnight.

- Building streaming pipelines: Need people who understand distributed systems, fault tolerance, handling out-of-order data, stateful computation. Requires fundamentally different mental model from batch processing.

Team that’s competent with Hadoop and Hive will struggle with Flink. Concepts don’t transfer directly. Windowing operations, exactly-once semantics, checkpoint mechanisms—none of this exists in batch world.

Hiring experienced streaming engineers is difficult. Market is small. Compensation requirements exceed those of batch engineers. Training an existing team takes 6-12 months of production incident learning.

Most failed real-time projects fail here, not on technology choice.

Migration Path That Works

- Pick one high-value use case, not everything

- Build proof of concept in parallel with existing system

- Run both systems simultaneously, compare outputs

- Shift traffic gradually (10%, 50%, 100%)

- Only retire batch system after validation period

- Apply same pattern to next use case

This takes longer but prevents catastrophic failures. You discover architectural problems in PoC stage. You catch subtle bugs during parallel running. You validate business benefit before full commitment.
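The parallel-run comparison can start as a plain reconciliation of the two systems' outputs, keyed however the results are keyed. The sketch below assumes daily totals keyed by date and a 1% relative tolerance; both are illustrative choices, and the figures are invented.

```python
import math


def compare_outputs(batch, streaming, rel_tol=0.01):
    """Report keys that are missing on either side or differ beyond the tolerance."""
    report = {"missing_in_streaming": [], "missing_in_batch": [], "mismatched": []}
    for key in sorted(set(batch) | set(streaming)):
        if key not in streaming:
            report["missing_in_streaming"].append(key)
        elif key not in batch:
            report["missing_in_batch"].append(key)
        elif not math.isclose(batch[key], streaming[key], rel_tol=rel_tol):
            report["mismatched"].append((key, batch[key], streaming[key]))
    return report


# Hypothetical daily revenue totals produced by each system.
batch_totals = {"2024-01-01": 1000.0, "2024-01-02": 1200.0, "2024-01-03": 900.0}
streaming_totals = {"2024-01-01": 1000.0, "2024-01-02": 1260.0, "2024-01-04": 50.0}

report = compare_outputs(batch_totals, streaming_totals)
for category, items in report.items():
    print(category, items)
```

Mismatches are where the subtle bugs tend to surface: late events counted in a different day, duplicates from replays, or records dropped at a window boundary.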

Conclusion

Real-time processing has become essential in data engineering services. It solves the problems that batch systems handle poorly, mainly latency. When you need decisions made faster, real-time wins. When you can wait until tomorrow morning, it probably doesn’t matter.

But here’s the thing: real-time isn’t a technology problem you solve once. You’re changing how your whole team thinks about data, changing your infrastructure, changing your operations. It’s harder than batch. Requires different people. Different tools. Different monitoring. Different headaches at 3 AM.

Streaming architecture makes sense for specific scenarios. Financial fraud? Absolutely. Personalization? Yeah, that works. Equipment alerts before it breaks? Yes. Monthly reconciliation reports? No, batch is fine. Daily analytics dashboard? Batch is still fine.

The mistake most organizations make is treating real-time as a checkbox. “Let’s go real-time because everyone’s doing it.” That’s backwards. Real-time solves latency problems. Does your problem actually have a latency problem?

Start here: measure what you actually need. How fast do decisions need to happen? Minutes? Seconds? If it’s days or hours, batch does the job. If it’s seconds or less, you have your answer.

Uncertain? Run both systems simultaneously. Keep batch running. Deploy real-time alongside it. Compare the outputs. Track whether real-time decisions actually change business outcomes. Track whether the extra cost is worth the improvement you’re seeing. Six months of parallel running costs money but saves you from a three-year regret.

Stop guessing. Measure instead. Real-time data platforms work when they solve actual business problems. They’re expensive and complicated when they don’t.